Canalys is part of Informa PLC

An RSAC 2024 takeaway: vendors compete for mindshare with AI beauty contest

At the recent RSA Conference in San Francisco, gen AI tools took the spotlight as cybersecurity vendors showcased new ways to operationalize the technology. As the industry faces escalating cyber threats, gen AI assistants offer a promising avenue for enhancing security resilience and optimizing existing cybersecurity technologies. But vendors are in a race against time with an emerging underground AI developer community.

Gen AI tools and use cases were front and center in many vendors’ announcements and demonstrations at this year’s RSA Conference (RSAC) in San Francisco. A year on from the initial excitement about gen AI’s potential benefits for the cybersecurity ecosystem, and the fears of its malicious use, vendors came to the event to showcase how the technology can be operationalized. Numerous vendors demonstrated gen AI assistants for security teams, including CrowdStrike (Charlotte AI), Fortinet (FortiAI), Google (Gemini in Security) and SentinelOne (Purple AI), while vendors such as Palo Alto Networks (Precision-AI) and Trend Micro (Vision One) made timely announcements on broader AI-driven platform advancements. This follows Microsoft’s commercial launch in April of its Copilot for Security offering, which was also showcased at RSAC.

More than gimmicks and AI assistants

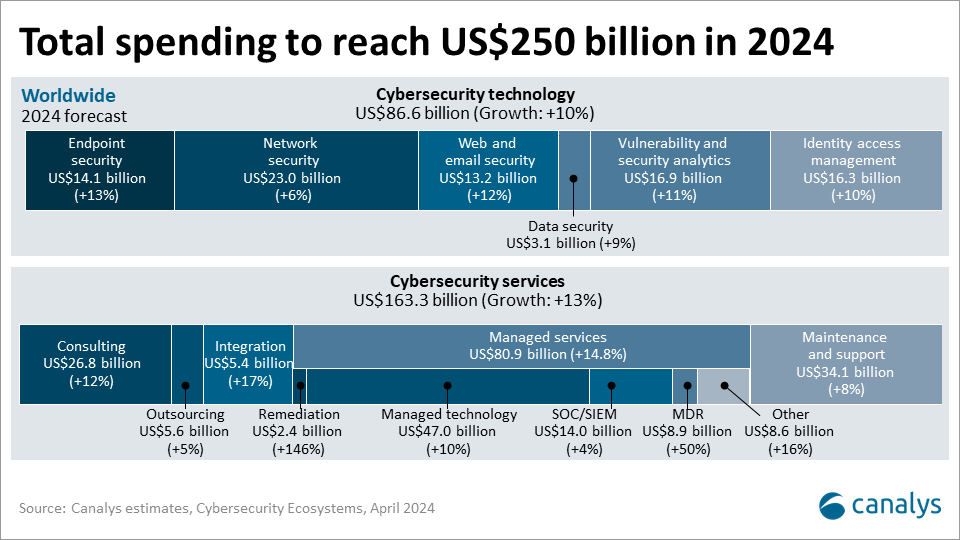

The emergence of gen AI tools comes at a pivotal time for the cybersecurity industry. Spending on technologies and services to identify, protect against, detect and respond to attacks continues to increase. Canalys forecasts total cybersecurity spending will grow by 12% this year to reach US$250 billion.

However, successful ransomware attacks are growing faster. Publicly disclosed attacks surged by 96% in the first four months of 2024 compared with the same period in 2023, continuing a worrying trend after a 68% increase the previous year. High-profile victims so far this year include Claro, First American, Hyundai, Norsk Hydro and Subway. This trend is unsustainable. Convincing customers to increase spending further will be challenging if they do not see progress being made. Spending is already under pressure given the uncertain macroeconomic environment. Longer sales cycles, shorter contracts and a focus on the areas of spending that reduce the most risk are already entrenched. Budgets are not bottomless.

A different approach to cybersecurity and building resilience is required to prevent recurring vulnerabilities and security failings from being repeatedly exploited. Building resilience requires optimizing existing cybersecurity technologies and services, not just acquiring more. Gen AI use cases have initially focused on optimization, specifically using data more effectively, creating insights faster, and automating and accelerating labor-intensive processes. Vendors demonstrated natural language requests for searching and summarizing threat intelligence feeds, investigating incidents, detecting anomalies in SIEM data, summarizing and querying events, creating SOAR playbooks and simulating workflows, generating audit reports, identifying malware, and managing least-privilege policies. These capabilities are primarily designed to help security teams manage analysts’ workloads by reducing toil, upskilling junior staff through on-the-job training, and improving staff retention by enabling more senior members to focus on complex areas.

In addition to gen AI assistants for security teams, vendors at RSAC 2024 also highlighted other gen AI opportunities. These included using gen AI in red teaming activities, for example to create initial drafts of malicious emails and landing pages for social engineering testing; red teaming services that identify risks posed to AI systems themselves, including prompt injection, jailbreak and toxicity attacks; and products and services for securing the wider use of gen AI within organizations.

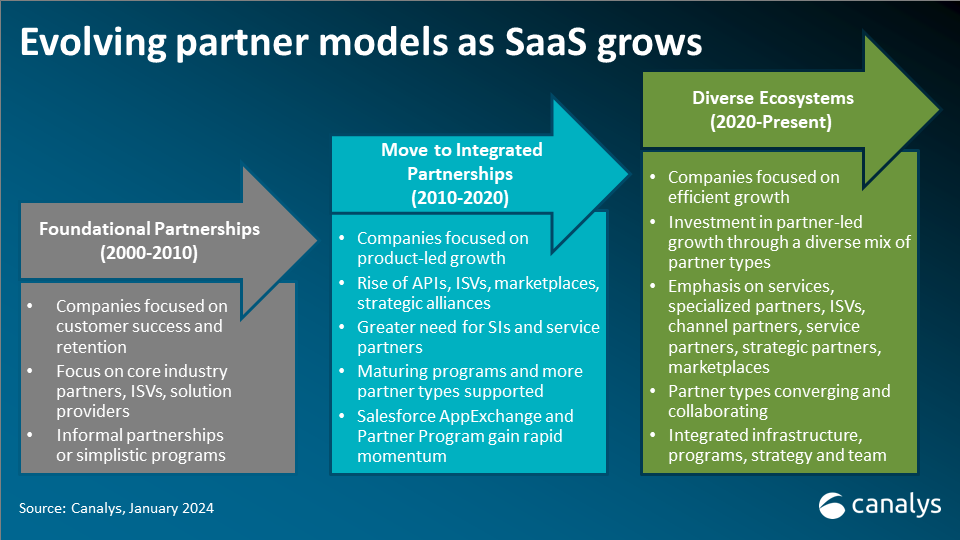

Operationalizing gen AI presents opportunities for partners

Enabling customers to operationalize gen AI by transforming security workflows will create professional services opportunities for partners. This is part of a larger gen AI opportunity for the channel ecosystem, which is forecast to grow to US$158 billion by 2028. Initially, GSIs and other consulting-led partners will be best positioned to capitalize on customer demand through their strategic advisory and change management practices. However, other opportunities around customizing solutions and advanced data services will emerge. More transaction-led partners will benefit from cross-selling opportunities, as accessing most gen AI capabilities currently requires a premium subscription, though Canalys expects gen AI to become a standard feature of platforms over time. More advanced channel partners will operationalize gen AI internally to enhance their managed cybersecurity services offerings. Vendor selection will be a critical long-term business decision.

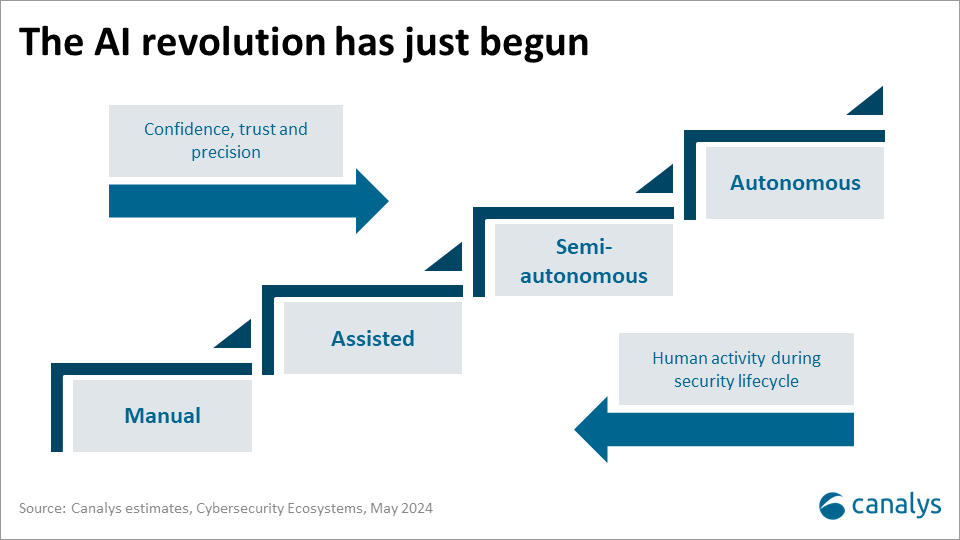

Confidence, trust and fine-tuning needed before the next stages of AI

There is a risk that gen AI becomes just another marketing checkbox for cybersecurity vendors. The initial use cases highlighted at RSAC are just the starting point, rather than a silver bullet to neutralize threat actors. This is only the beginning of operationalizing AI for cybersecurity. It must evolve from primarily assisting security teams to being semi-autonomous and even autonomous. AI will evolve to manage the full cybersecurity lifecycle, from identifying risks and protecting assets to detecting suspicious activities and responding to attacks, without human intervention. Building trust and confidence in these models will take time, and continuous refinement is needed before AI goes beyond the assisted stage. The exact timeline of this process is unclear, as vendors proceed tentatively under intense scrutiny from governments and regulators.

However, it is a race against time to build AI systems for cybersecurity. While technology firms operate within a strengthening framework for responsible AI development, including transparency, privacy, accountability, human control and social responsibility, an underground movement of AI developers around the world is operating beyond the reach of such restraints. There is a high risk that more advanced and autonomous AI tools will find their way into the hands of threat actors first.